API to Search, Extract, and Structure Web Data

Get clean data for your AI from any website and automate your web workflows


· Start "research YC" workflow

· Automate brand protection

· Research donors in NYC

· Find local businesses

· Analyze brand visibility

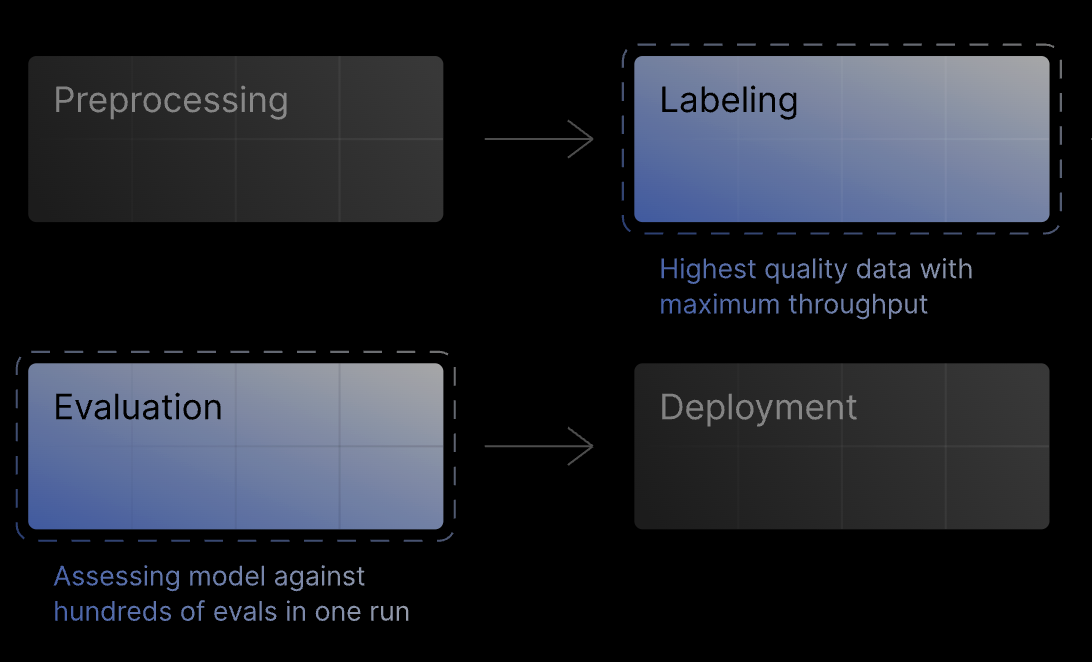

· Parsers - structured data

· Data router

· Automation engine

· Click, fill forms

· Distributed infra

· Map/Crawl

· VM sandboxes

· Batches API

```json
{
  "id": "request_56is5c9gyw",
  "created": 1317322740,
  "result": {
    "markdown_content": "# Ex",
    "json_content": {},
    "html_content": "<DOC>"
  }
}
```
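The response above nests each output format under `result`. Here is a minimal Python sketch of reading it. It assumes the field names shown in the sample (`markdown_content`, `html_content`, `json_content`) are what the API returns. `pick_format` is a hypothetical helper, not part of any Olostep SDK.

```python
import json

# Sample payload copied from the response shown above
RAW = """
{
  "id": "request_56is5c9gyw",
  "created": 1317322740,
  "result": {
    "markdown_content": "# Ex",
    "json_content": {},
    "html_content": "<DOC>"
  }
}
"""


def pick_format(response: dict, fmt: str):
    """Return one output format from a response dict (hypothetical helper).

    `fmt` is "markdown", "html" or "json" and maps to the `<fmt>_content`
    key inside `result`. Returns None when that format is absent.
    """
    return response.get("result", {}).get(f"{fmt}_content")


response = json.loads(RAW)
print(response["id"])                     # request_56is5c9gyw
print(pick_format(response, "markdown"))  # # Ex
print(pick_format(response, "pdf"))       # None
```

Returning `None` for a missing format keeps callers from crashing when a request did not ask for that format.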

...and many more


1import requests

2

3API_URL = 'https://api.olostep.com/v1/answers'

4API_KEY = '<your_token>'

5

6headers = {

7 'Authorization': f'Bearer {API_KEY}',

8 'Content-Type': 'application/json'

9}

10

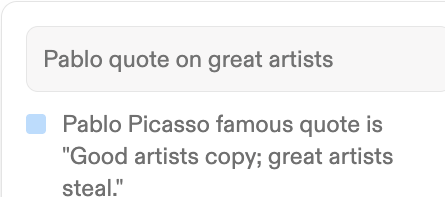

11data = {

12 "task": "What is the latest book by J.K. Rowling?",

13 "json": {

14 "book_title": "",

15 "author": "",

16 "release_date": ""

17 }

18}

19

20response = requests.post(API_URL, headers=headers, json=data)

21result = response.json()

22

23print(json.dumps(result, indent=4))1// Using native fetch API (Node.js v18+)

2const API_URL = 'https://api.olostep.com/v1/answers';

3const API_KEY = '<your_token>';

4

5fetch(API_URL, {

6 method: 'POST',

7 headers: {

8 'Authorization': `Bearer ${API_KEY}`,

9 'Content-Type': 'application/json'

10 },

11 body: JSON.stringify({

12 "task": "What is the latest book by J.K. Rowling?",

13 "json": {

14 "book_title": "",

15 "author": "",

16 "release_date": ""

17 }

18 })

19})

20 .then(response => response.json())

21 .then(result => {

22 console.log(JSON.stringify(result, null, 4));

23 })

24 .catch(error => console.error('Error:', error));1import requests

2

3API_URL = 'https://api.olostep.com/v1/crawls'

4API_KEY = '<token>'

5

6headers = {'Authorization': f'Bearer {API_KEY}'}

7data = {

8 "start_url": "https://docs.stripe.com/api",

9 "include_urls": ["/**"],

10 "max_pages": 10

11}

12

13response = requests.post(API_URL, headers=headers, json=data)

14result = response.json()

15

16print(f"Crawl ID: {result['id']}")

17print(f"URL: {result['start_url']}")1// Using native fetch API (Node.js v18+)

2const API_URL = 'https://api.olostep.com/v1/crawls';

3const API_KEY = '<token>';

4

5fetch(API_URL, {

6 method: 'POST',

7 headers: {

8 'Authorization': `Bearer ${API_KEY}`,

9 'Content-Type': 'application/json'

10 },

11 body: JSON.stringify({

12 "start_url": "https://docs.stripe.com/api",

13 "include_urls": ["/**"],

14 "max_pages": 10

15 })

16})

17.then(response => response.json())

18.then(result => {

19 console.log(`Crawl ID: ${result.id}`);

20 console.log(`URL: ${result.start_url}`);

21})

22.catch(error => console.error('Error:', error));1import requests

2

3API_URL = 'https://api.olostep.com/v1/scrapes'

4API_KEY = '<your_token>'

5

6headers = {'Authorization': f'Bearer {API_KEY}'}

7data = {"url_to_scrape": "https://github.com"}

8

9response = requests.post(API_URL, headers=headers, json=data)

10result = response.json()

11

12print(f"Scrape ID: {result['id']}")

13print(f"URL: {result['url_to_scrape']}")1// Using native fetch API (Node.js v18+)

2const API_URL = 'https://api.olostep.com/v1/scrapes';

3const API_KEY = '<your_token>';

4

5fetch(API_URL, {

6 method: 'POST',

7 headers: {

8 'Authorization': `Bearer ${API_KEY}`,

9 'Content-Type': 'application/json'

10 },

11 body: JSON.stringify({

12 "url_to_scrape": "https://github.com"

13 })

14})

15.then(response => response.json())

16.then(result => {

17 console.log(`Scrape ID: ${result.id}`);

18 console.log(`URL: ${result.url_to_scrape}`);

19})

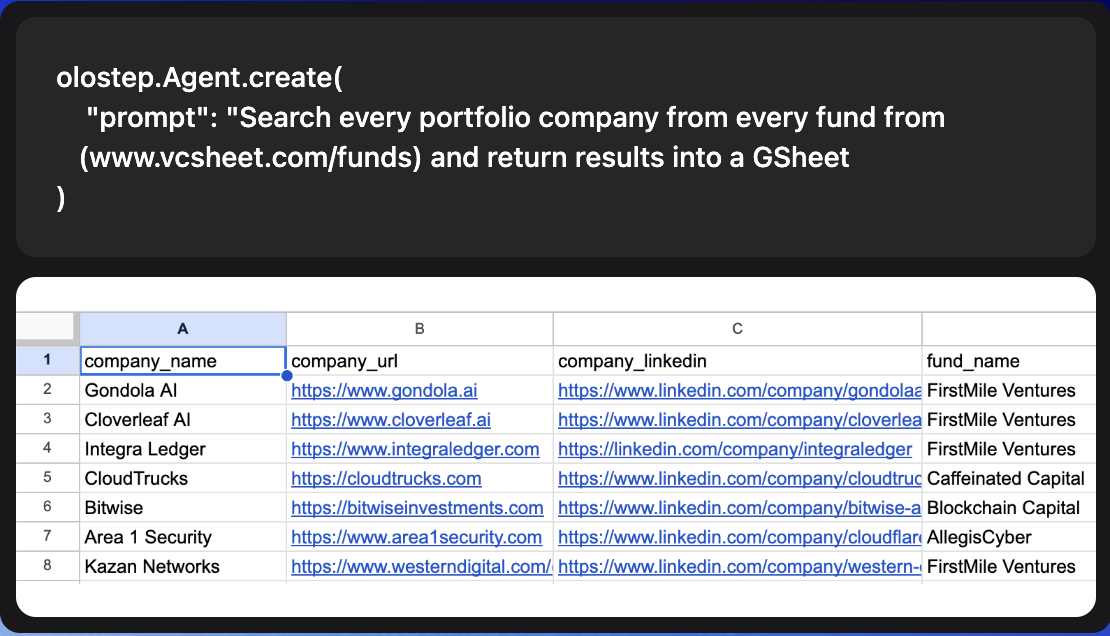

20.catch(error => console.error('Error:', error));1import requests

2

3API_URL = 'https://api.olostep.com/v1/agents' # endpoint available to select customers

4API_KEY = '<token>'

5

6headers = {'Authorization': f'Bearer {API_KEY}', 'Content-Type': 'application/json'}

7data = {

8 "prompt": '''

9 Search every portfolio company from every fund from

10 (https://www.vcsheet.com/funds) and return the results into a google sheet

11 with the following columns (Fund Name, Fund Website

12 URL, Fund LinkedIn URL, Portfolio Company Name, Portfolio

13 Company URL, Portfolio Company LinkedIn URL). Run every week

14 on Monday at 9:00 AM. Send an email to steve@example.com when

15 new portfolio companies are added to any of these funds.

16 ''',

17 "model": "gpt-4.1"

18}

19

20response = requests.post(API_URL, headers=headers, json=data)

21result = response.json()

22

23print(f"Agent ID: {result['id']}")

24print(f"Status: {result['status']}")

25# You can then schedule this agent1// Using native fetch API (Node.js v18+)

2const API_URL = 'https://api.olostep.com/v1/agents'; // endpoint available to select customers

3const API_KEY = '<token>';

4

5fetch(API_URL, {

6 method: 'POST',

7 headers: {

8 'Authorization': `Bearer ${API_KEY}`,

9 'Content-Type': 'application/json'

10 },

11 body: JSON.stringify({

12 "prompt": `

13 Search every portfolio company from every fund from

14 (https://www.vcsheet.com/funds) and return the results into a google sheet

15 with the following columns (Fund Name, Fund Website

16 URL, Fund LinkedIn URL, Portfolio Company Name, Portfolio

17 Company URL, Portfolio Company LinkedIn URL). Run every week

18 on Monday at 9:00 AM. Send an email to steve@example.com when

19 new portfolio companies are added to any of these funds.

20 `,

21 "model": "gpt-4.1"

22 })

23})

24 .then(response => response.json())

25 .then(result => {

26 console.log(`Agent ID: ${result.id}`);

27 console.log(`Status: ${result.status}`);

28 // You can then schedule this agent

29 })

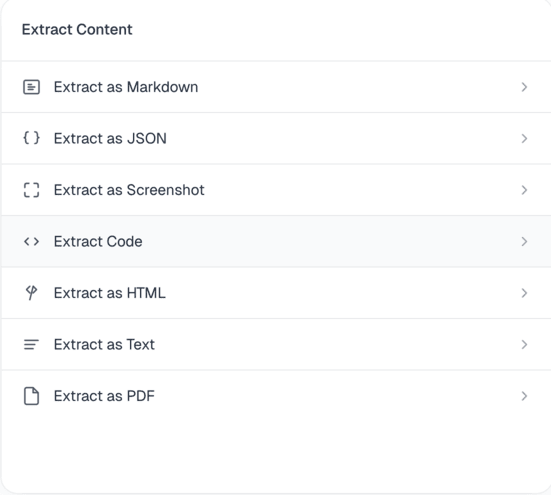

30 .catch(error => console.error('Error:', error));Get the data in the format you want

Get Markdown, HTML, PDF, or structured JSON

Pass a URL to the API and retrieve the website's HTML, Markdown, PDF, or plain text. You can also specify a schema to get only the clean, structured JSON data you want.
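When you pass a schema, such as the `json` template with empty values in the `/v1/answers` example, it can help to check that the structured result actually fills in every requested field. Here is a minimal sketch. It assumes the structured data comes back as a (possibly nested) dict whose keys mirror the template. `missing_fields` is a hypothetical helper, and the sample result uses placeholder values for illustration only.

```python
from typing import Any


def missing_fields(template: dict, data: Any, prefix: str = "") -> list:
    """List dotted paths of template keys that are absent or empty in `data`.

    A field counts as missing if its key is absent or its value is None or "".
    Non-empty nested dicts in the template are checked recursively.
    """
    missing = []
    for key, expected in template.items():
        path = f"{prefix}{key}"
        value = data.get(key) if isinstance(data, dict) else None
        if isinstance(expected, dict) and expected:
            # Recurse into nested templates; a non-dict value counts as empty
            nested = value if isinstance(value, dict) else {}
            missing.extend(missing_fields(expected, nested, path + "."))
        elif value is None or value == "":
            missing.append(path)
    return missing


# Same shape as the template in the /v1/answers example
schema = {"book_title": "", "author": "", "release_date": ""}
# Placeholder result, not real API output
result = {"book_title": "Example Title", "author": "Example Author", "release_date": ""}

print(missing_fields(schema, result))  # ['release_date']
```

Reporting dotted paths rather than a simple true/false makes it easy to log which fields need a retry or a manual check.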

JS execution + residential IPs

Web pages rendered in a browser

Every request runs with full JavaScript support, premium residential IP addresses, and proxy rotation to avoid bot detection.

Crawl

Get all the data starting from a single URL

Multi-depth crawling lets you get clean Markdown from all the subpages of a website. It also works without a sitemap, which is useful for documentation websites, for example.
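Crawling without a sitemap comes down to following links outward from the start URL, level by level, until a page budget is reached. Here is a minimal breadth-first sketch over an in-memory link graph, so no network requests are made. It assumes that `fnmatchcase`-style path patterns are a fair stand-in for the `include_urls` globs used in the `/v1/crawls` example; Olostep's actual matching rules may differ. `crawl`, `LINKS`, and the `example.com` URLs are hypothetical.

```python
from collections import deque
from fnmatch import fnmatchcase
from urllib.parse import urlparse

# Hypothetical link graph standing in for pages fetched over the network
LINKS = {
    "https://docs.example.com/api": [
        "https://docs.example.com/api/charges",
        "https://docs.example.com/api/customers",
        "https://blog.example.com/post",
    ],
    "https://docs.example.com/api/charges": [
        "https://docs.example.com/api/charges/create",
        "https://docs.example.com/api",
    ],
    "https://docs.example.com/api/customers": [],
    "https://docs.example.com/api/charges/create": [],
}


def crawl(start_url, include_patterns, max_pages):
    """Breadth-first crawl: visit pages level by level, up to max_pages."""
    start_host = urlparse(start_url).netloc
    seen = {start_url}
    queue = deque([start_url])
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        visited.append(url)  # a real crawler would fetch and convert the page here
        for link in LINKS.get(url, []):
            parsed = urlparse(link)
            # Stay on the start host and skip links already queued
            if parsed.netloc != start_host or link in seen:
                continue
            # Keep only links whose path matches one of the include patterns
            if any(fnmatchcase(parsed.path, p) for p in include_patterns):
                seen.add(link)
                queue.append(link)
    return visited


print(crawl("https://docs.example.com/api", ["/**"], max_pages=10))
```

Breadth-first order means a small `max_pages` budget is spent on the pages closest to the start URL first. The `seen` set prevents back-links, such as `/api/charges` linking to `/api`, from causing loops.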

Batch executions

Scale to another level

With Batch Executions, you can scrape 100k pages in around 5-7 minutes. You can run up to 5 Batch Executions in parallel and get 500k scrapes in the same amount of time. At that rate, 1 million requests take around 15 minutes.
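The figures above can be turned into a rough planning estimate. Here is a minimal sketch. It assumes, as stated above, that each Batch Execution holds up to 100k URLs, takes 5 to 7 minutes, and that up to 5 run in parallel. Real timings will vary with the target sites. `estimate_minutes` and `split_into_batches` are hypothetical helpers, not part of the Batches API.

```python
import math


def estimate_minutes(total_pages, batch_size=100_000, parallel=5,
                     minutes_per_batch=(5, 7)):
    """Return a (low, high) wall-clock estimate in minutes.

    Pages are split into batches of `batch_size`. Up to `parallel` batches
    run at once, so the job takes ceil(batches / parallel) rounds.
    """
    batches = math.ceil(total_pages / batch_size)
    rounds = math.ceil(batches / parallel)
    low, high = minutes_per_batch
    return rounds * low, rounds * high


def split_into_batches(urls, batch_size=100_000):
    """Chunk a URL list into consecutive slices of at most batch_size."""
    return [urls[i:i + batch_size] for i in range(0, len(urls), batch_size)]


print(estimate_minutes(100_000))    # (5, 7)
print(estimate_minutes(500_000))    # (5, 7)
print(estimate_minutes(1_000_000))  # (10, 14)
```

One million pages need 10 batches, or 2 rounds of 5, which gives 10 to 14 minutes. That is in line with the "around 15 minutes" quoted above.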

Pricing that Makes Sense

We want you to be able to build a business on top of Olostep.

Start for free. Scale with no worries.

Top-ups

Need flexibility or have spiky usage? You can buy credit packs. Credits are valid for 6 months.

Credit pack


Enterprise

Data tailored to your industry

Access the clean, structured data that matters most to you, when it matters most. Power search, deep research, AI agents, and your applications.

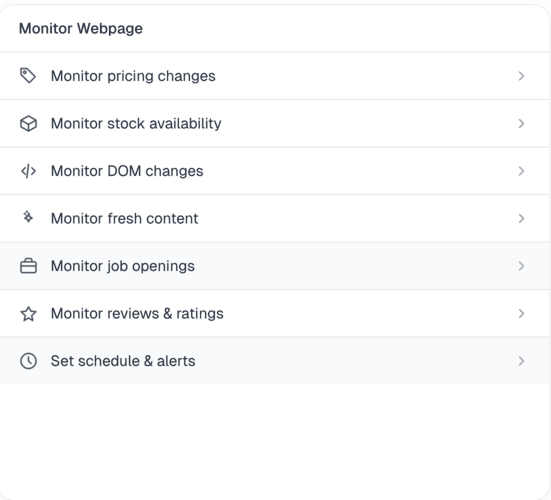

Deep research agents

Enable your agent to conduct deep research on large Web datasets.

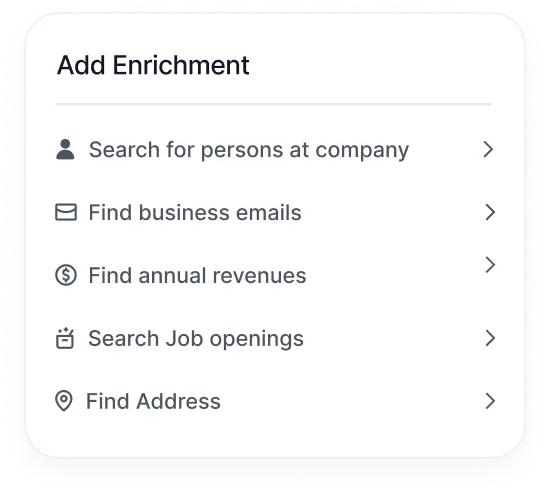

Spreadsheet enrichment

Get real-time web data to enrich your spreadsheets and analyze data.

Lead generation

Research, enrich, validate, and analyze leads. Enhance your sales data.

Vertical AI search

Build industry-specific search engines to turn data into an actionable resource.

AI Brand visibility

Monitor brands to help improve their AI visibility (Answer Engine Optimization).

Agentic Web automations

Enable AI Agents to automate tasks on the Web: fill forms, click on buttons, etc.

Trusted by world-class teams

Discover why the best teams in the world choose Olostep.

Read more customer stories →

Olostep is the best!!! We automated entire data pipelines with just a prompt

Olostep has become the default Web Layer infrastructure for our company

Olostep works like a charm! And your customer service is exceptional

Olostep lets us turn any website into an API. Great product, great people

I highly recommend Olostep, great product!

We verify coupon codes at scale. Love Olostep. It works on any e-commerce

Olostep is the best API to search, extract, and structure data from the Web. Happy to be customers

We use /batches combined with parsers and it's magical how we can get structured data deterministically at large scale

Olostep allowed us to search and structure events data across the Web

Reliable and cost-effective API for working with data. Congrats on the cool product