Dataset work does not end when you publish “v1.” The job is to keep data usable as sources change, schemas drift, and real-world meaning evolves, which is the core of data curation as an ongoing practice.

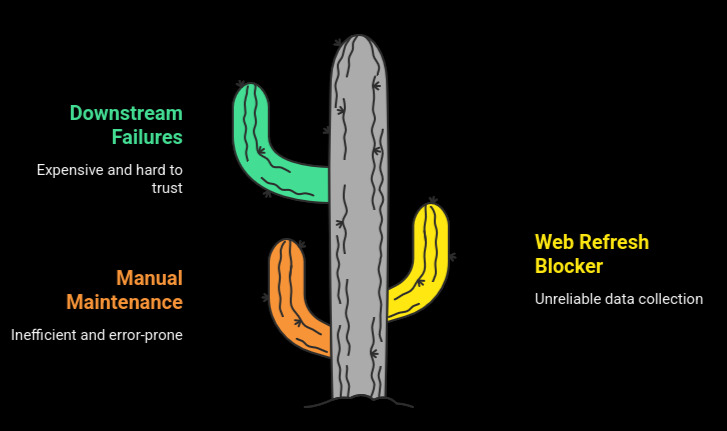

When teams underinvest in maintenance, failures rarely look dramatic at first. They show up as small inconsistencies, stale policies, missing metadata, and quiet label drift. Over time, those small issues compound into downstream failures that are expensive to debug and that erode trust in the data, the familiar pattern of data issues cascading through AI systems.

The fix is an agent-led maintenance loop, backed by a web refresh layer that can reliably re-collect source truth at scale.

This article covers why manual maintenance hits a ceiling, how agent maintainers work, why web refresh is the main blocker, and how to close that gap so maintenance stays continuous and reliable.

Why Manual Maintenance Stops Scaling

Manual maintenance typically follows the same loop:

- Watch sources for updates

- Re-collect data and re-run pipelines

- Fix schema drift and broken transforms

- Dedupe, normalize, and re-label edge cases

- Update the docs and publish a new version

- Respond to failures after someone notices

This works when sources are stable and volume is small. It breaks when change becomes constant.

Freshness debt is the first problem. Humans cannot track dozens of sources that update weekly, daily, or multiple times a day.

Silent decay is the second. Pipelines can “succeed” while quality drops: chunks get less relevant, metadata disappears, and documentation stops matching reality.

Workload growth is the third. Every new source format, consumer, and output variant adds another maintenance path. At that point, manual maintenance becomes reactive operations rather than controlled curation.

Once maintenance turns into firefighting, the obvious question is whether the repetitive parts can be automated without losing control. That is where agents enter.

Why Agent Maintainers Work Better

Agent maintainers are not just “automation.” They change the operating model.

Instead of waiting for a complaint, an agent runs a continuous cycle: detect changes, propose updates, validate, and escalate edge cases. This matters because many quality problems are subtle and context-dependent, which is why benchmarks exist to test whether agents can spot and fix real curation issues.

The advantage is practical:

- Agents do the monitoring continuously, not in periodic sprints

- Agents apply the same checks every time, reducing one-off fixes

- Humans shift from doing refresh work to approving the risky changes

That last point is the key. The goal is not to remove humans. The goal is to reserve human judgment for the cases where judgment is required.

To make that work, the agent needs a reliable maintenance workflow.

What Agent Maintenance Looks Like in Practice

A useful way to structure agent-led maintenance is this six-step loop:

1. Observe signals: Diffs in source content, missing fields, distribution shifts, broken links, and schema changes.

2. Diagnose likely issues: Stale sections, inconsistent labels, missing metadata, contradictions, or changes that look harmless but alter meaning, which is exactly the kind of failure mode emphasized in agent-focused curation evaluation.

3. Refresh what changed: Targeted updates instead of full rebuilds.

4. Validate: Rules, heuristics, quality scoring, and safety constraints decide what is publishable.

5. Escalate uncertainty: Humans review ambiguous diffs, schema remaps, or policy-sensitive content.

6. Version and audit: Every update ships with provenance: what changed, why, and when.
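One pass of this loop can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a reference implementation: the `Record` type, the `maintain` function, and the `publish_threshold` cutoff are hypothetical names invented for the example, and the refresh and validation steps are passed in as plain callables so the sketch stays independent of any particular scraper or scoring method.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable


@dataclass
class Record:
    url: str
    content: str
    version: int = 1
    history: list = field(default_factory=list)  # provenance entries


def maintain(
    records: dict[str, Record],
    changed_urls: list[str],            # observe: URLs with a change signal
    refresh: Callable[[str], str],      # refresh: re-collect one source
    validate: Callable[[str], float],   # validate: quality score in [0, 1]
    publish_threshold: float = 0.8,     # hypothetical cutoff
) -> list[str]:
    """Run one pass of the loop and return the URLs escalated to a human."""
    escalated = []
    for url in changed_urls:
        record = records[url]
        new_content = refresh(url)
        # Diagnose: a signal fired, but the content may be unchanged.
        if new_content == record.content:
            continue
        score = validate(new_content)
        # Escalate: low-scoring updates are held for review, not published.
        if score < publish_threshold:
            escalated.append(url)
            continue
        # Version and audit: record what changed, why, and when.
        record.history.append({
            "from_version": record.version,
            "reason": "source content changed",
            "validation_score": score,
            "updated_at": datetime.now(timezone.utc).isoformat(),
        })
        record.content = new_content
        record.version += 1
    return escalated
```

The design choice worth noting is that an update is either published with a provenance entry or escalated; nothing is overwritten silently.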

This loop is easy to describe. The part that usually fails is step 3, the refresh, because re-collecting data from the real world is messy. That is most obvious with web-based datasets.

The Web Refresh Bottleneck

Many curated datasets depend on web sources, including documentation, policy pages, changelogs, pricing, directories, public listings, and product catalogs.

Agents can decide what needs updating, but web refresh is often the weakest link:

- Pages are dynamic, and layouts change without notice

- Extraction varies across sites and across runs

- Rate limits and intermittent failures create gaps

- Scaling refresh to thousands of URLs becomes slow without a job model

- Agents waste cycles polling or re-running work to retrieve results

If web refreshes are unreliable, the entire agent loop becomes unreliable. That is why agent-led maintenance needs a web layer that behaves like production infrastructure, not a collection of fragile scripts.

The requirements follow directly from these failure modes. The sections below cover each one in turn.

Real-time Extraction for Verification Checks

Agents need fast spot checks. When a change signal appears, the agent should be able to re-fetch the URL, convert it to a stable format, and validate it immediately.

Single-URL scrapes fit this step: they return clean Markdown, HTML, text, or JSON and can handle dynamically rendered pages. The agent treats the scrape output as the “current truth,” compares it against the last indexed version, and then decides whether the change is significant enough to trigger a larger refresh.

That creates a reliable decision step before you spend resources refreshing an entire corpus.
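A minimal sketch of that decision step, assuming the scrape output and the indexed version are both available as plain text. The function names and the 5% threshold are illustrative assumptions, not part of any particular scraping API; the comparison itself uses Python's standard `difflib`.

```python
import difflib


def normalize(text: str) -> str:
    """Collapse whitespace so cosmetic reflows do not register as changes."""
    return " ".join(text.split())


def change_ratio(indexed: str, current: str) -> float:
    """Return 0.0 for identical text, rising toward 1.0 as the texts diverge."""
    old, new = normalize(indexed), normalize(current)
    if old == new:
        return 0.0
    return 1.0 - difflib.SequenceMatcher(None, old, new).ratio()


def needs_refresh(indexed: str, current: str, threshold: float = 0.05) -> bool:
    """Decide whether a change is large enough to trigger a wider refresh.

    The threshold is a hypothetical default and should be tuned per source.
    """
    return change_ratio(indexed, current) > threshold
```

A character-level ratio is a blunt instrument: a one-digit price change scores low even though it may matter a great deal. In practice a check like this would sit alongside field-level rules for values that are small in size but high in impact.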

Batch Refresh for Large-Scale Updates

Spot checks are not enough when a whole section of a site changes or when you maintain corpora at scale. Agents need a way to refresh many URLs with predictable behavior.

A reliable batch model has three properties:

- a fixed input list so nothing is “implicitly discovered” mid-run

- predictable completion behavior, even at large input sizes

- explicit output tracking so missing items are obvious

This aligns with a batch workflow for large URL lists, using a job-based model. It allows an agent to refresh thousands of pages as a single unit of work, then reason about completeness and failures at the job level rather than chasing per-URL exceptions.

At this point, the agent’s role becomes more like an operator: reconcile inputs and outcomes, then repair only what failed.
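Reconciliation at the job level might look like the sketch below. The result shape (a mapping from URL to a dictionary with an `ok` flag) is an assumption made for illustration; a real batch service will have its own response format.

```python
from dataclasses import dataclass


@dataclass
class BatchReport:
    succeeded: list[str]
    failed: list[str]      # returned, but with an error status
    missing: list[str]     # submitted, but never returned
    unexpected: list[str]  # returned, but never submitted

    @property
    def to_retry(self) -> list[str]:
        return self.failed + self.missing


def reconcile(submitted: list[str], results: dict[str, dict]) -> BatchReport:
    """Compare the fixed input list against per-URL results.

    `results` maps URL -> {"ok": bool, ...}; this shape is hypothetical.
    """
    succeeded = [u for u in submitted if u in results and results[u].get("ok")]
    failed = [u for u in submitted if u in results and not results[u].get("ok")]
    missing = [u for u in submitted if u not in results]
    unexpected = sorted(set(results) - set(submitted))
    return BatchReport(succeeded, failed, missing, unexpected)
```

Because the input list is fixed, every submitted URL ends up in exactly one of `succeeded`, `failed`, or `missing`, so gaps cannot hide.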

Run First, Retrieve Later

Agents should not block while a batch runs. They should submit work, move on, then retrieve results later.

That separation is the difference between “script automation” and “production operations.” It prevents wasted compute, reduces reruns, and makes retries cheap.

A clean pattern is to execute the job first and retrieve its content in a separate step once the job completes. This enables agent workflows like:

- Submit batch

- Receive completion signal

- Retrieve outputs for validation and indexing

- Retry only missing or failed items

That pattern makes dataset maintenance resilient.
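The sketch below imitates this pattern locally with Python's `concurrent.futures`. Here `run_batch` is a stand-in for a remote batch job and `refresh_with_retries` is a hypothetical helper; with a real service the submit and retrieve calls would be API requests rather than a thread-pool future.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable


def run_batch(urls: list[str], fetch: Callable[[str], str]) -> dict[str, str]:
    """Stand-in for a remote batch job: returns content for URLs that succeeded."""
    outputs = {}
    for url in urls:
        try:
            outputs[url] = fetch(url)
        except Exception:
            pass  # failed items are simply absent from the output
    return outputs


def refresh_with_retries(
    urls: list[str],
    fetch: Callable[[str], str],
    executor: ThreadPoolExecutor,
    max_attempts: int = 3,
) -> tuple[dict[str, str], list[str]]:
    """Return (collected outputs, URLs still missing after all attempts)."""
    collected: dict[str, str] = {}
    pending = list(urls)
    for _ in range(max_attempts):
        if not pending:
            break
        # Submit the batch; this call returns immediately.
        job: Future = executor.submit(run_batch, pending, fetch)
        # ... the agent is free to do other work here ...
        # Retrieve outputs once they are needed.
        collected.update(job.result())
        # Retry only the items that are still missing.
        pending = [u for u in pending if u not in collected]
    return collected, pending
```

Each retry resubmits only the shrinking `pending` list, so items that already succeeded are never fetched twice.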

Schedules and Completion Triggers to Make Maintenance Continuous

Manual maintenance fails partly because it depends on human reminders. Agent maintenance fails when it still depends on polling and ad hoc runs.

To keep maintenance continuous, two features matter:

- Scheduled execution (daily, weekly, or triggered cadence)

- Event-driven completion, so downstream steps run automatically

An agent can run refresh on a cadence using scheduled runs built into the workflow, and then kick off validation and indexing only when jobs complete via webhook-based completion notifications.

This removes babysitting and keeps the agent loop stable over time.
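Both features reduce to small pieces of logic, sketched here under assumptions. The payload shape `{"job_id": ..., "status": ...}` and the status values are hypothetical; a real webhook will define its own fields, and the handler would sit behind an HTTP endpoint.

```python
from datetime import datetime, timedelta
from typing import Callable


def is_due(last_run: datetime, cadence: timedelta, now: datetime) -> bool:
    """Scheduled execution: a source is due once its cadence has elapsed."""
    return now - last_run >= cadence


def handle_completion(
    event: dict,
    on_success: Callable[[str], None],
    on_failure: Callable[[str], None],
) -> bool:
    """Event-driven completion: route a job notification to the next step.

    Returns True if the event triggered a downstream step, False if ignored.
    The event fields used here are assumptions for illustration.
    """
    job_id = event.get("job_id")
    status = event.get("status")
    if not job_id:
        return False
    if status == "completed":
        on_success(job_id)  # e.g. retrieve outputs, then validate and index
        return True
    if status == "failed":
        on_failure(job_id)  # e.g. schedule a retry or escalate
        return True
    return False  # other events need no downstream action
```

With this split, nothing in the agent polls for status: the schedule decides when work starts, and the completion event decides when the next step runs.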

A Workflow That Holds Up in Production

A practical end-to-end flow for a web-backed dataset looks like this:

- Detect changed URLs since last run (diff signals, sitemap deltas, or internal change logs)

- Run real-time scrapes on a small sample to confirm the change is meaningful

- Submit the changed URL list as a batch job

- Receive a completion event, then retrieve outputs

- Validate freshness, metadata completeness, dedupe integrity, and section structure

- Escalate only ambiguous diffs to a reviewer

- Publish a new dataset version with provenance and timestamps

This is the key shift: agents handle continuous operations, and humans handle edge-case judgment. The web layer provides the reliability guarantees that make the agent loop trustworthy.
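The validation step in this flow can be sketched as a publish gate. The field names in `REQUIRED_FIELDS` and the row shape are a hypothetical schema chosen for the example, and exact-content hashing is only the simplest form of dedupe check; near-duplicate detection would need a different technique.

```python
import hashlib
from datetime import datetime, timedelta

REQUIRED_FIELDS = ("url", "title", "fetched_at", "content")  # hypothetical schema


def validate_batch(
    rows: list[dict], now: datetime, max_age: timedelta
) -> dict[str, list[str]]:
    """Return the rows failing each check; all-empty lists mean publishable."""
    problems: dict[str, list[str]] = {"incomplete": [], "stale": [], "duplicate": []}
    seen: dict[str, str] = {}
    for i, row in enumerate(rows):
        key = row.get("url") or f"row {i}"
        # Metadata completeness: every required field must be present and non-empty.
        if any(not row.get(name) for name in REQUIRED_FIELDS):
            problems["incomplete"].append(key)
            continue
        # Freshness: the row must have been fetched within the allowed window.
        if now - row["fetched_at"] > max_age:
            problems["stale"].append(key)
        # Dedupe integrity: identical content under two keys is flagged.
        digest = hashlib.sha256(row["content"].encode("utf-8")).hexdigest()
        if digest in seen:
            problems["duplicate"].append(key)
        else:
            seen[digest] = key
    return problems
```

Rows that appear in any list would be held back or escalated, while a batch with three empty lists can proceed to publication.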

Key Takeaways

- Manual dataset maintenance hits a throughput ceiling because freshness debt and silent decay grow faster than humans can respond.

- Agent maintainers work better because they run a continuous detect–refresh–validate loop, while humans focus on ambiguous or high-risk changes.

- Web refresh is the main bottleneck for web-backed datasets; without reliable scrapes, batches, and retrieval, even good agents produce brittle results.

- A production-grade workflow combines real-time scrapes, batch refresh, async retrieval, and scheduled runs so that agents keep datasets fresh by default.